When the EU's Artificial Intelligence Act (AIA) came into force on August 1, 2024, a new era of artificial intelligence regulation began in Europe. The focus is on prohibited AI systems and high-risk AI systems (HR AI). In this article, which is based on an informative summary by the VDE, you will find out what requirements providers and operators will face from 2025 onwards.

Gradual introduction defines the roadmap

The various parts of the AIA will be introduced gradually:

- The general provisions and the prohibitions on certain AI practices have applied since February 2, 2025

- The provisions on notified bodies for high-risk (HR) systems, on general-purpose AI models, on governance and on sanctions, among others, have applied since August 2, 2025

- Most other provisions apply from August 2, 2026

- The classification rules for high-risk AI systems under Art. 6(1) AIA, and the obligations tied to them, apply from August 2, 2027
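
For internal compliance planning, the staged dates above can be kept in a small lookup table. The following is only a minimal sketch: the short labels are paraphrases of the list above, and the function names `applicable_on` and `next_milestone` are hypothetical helpers, not part of any official tool.

```python
from datetime import date

# Milestones as listed in this article; the labels are shortened paraphrases.
MILESTONES = [
    (date(2025, 2, 2), "general provisions and prohibited practices"),
    (date(2025, 8, 2), "governance and sanctions, among others"),
    (date(2026, 8, 2), "most other provisions"),
    (date(2027, 8, 2), "classification rules for high-risk AI systems"),
]


def applicable_on(day: date) -> list[str]:
    """Return the labels of all milestones that have been reached on `day`."""
    return [label for start, label in MILESTONES if start <= day]


def next_milestone(day: date):
    """Return the next (date, label) pair after `day`, or None if all have passed."""
    upcoming = [m for m in MILESTONES if m[0] > day]
    return min(upcoming) if upcoming else None
```

Calling `next_milestone(date.today())` then shows which stage an organization should prepare for next.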

What is an AI system?

The AIA defines an AI system as a machine-based system that is designed to operate with varying degrees of autonomy, that may adapt after deployment, and that infers from the inputs it receives, for explicit or implicit goals, how to generate outputs (predictions, content, recommendations or decisions) that can influence physical or virtual environments.

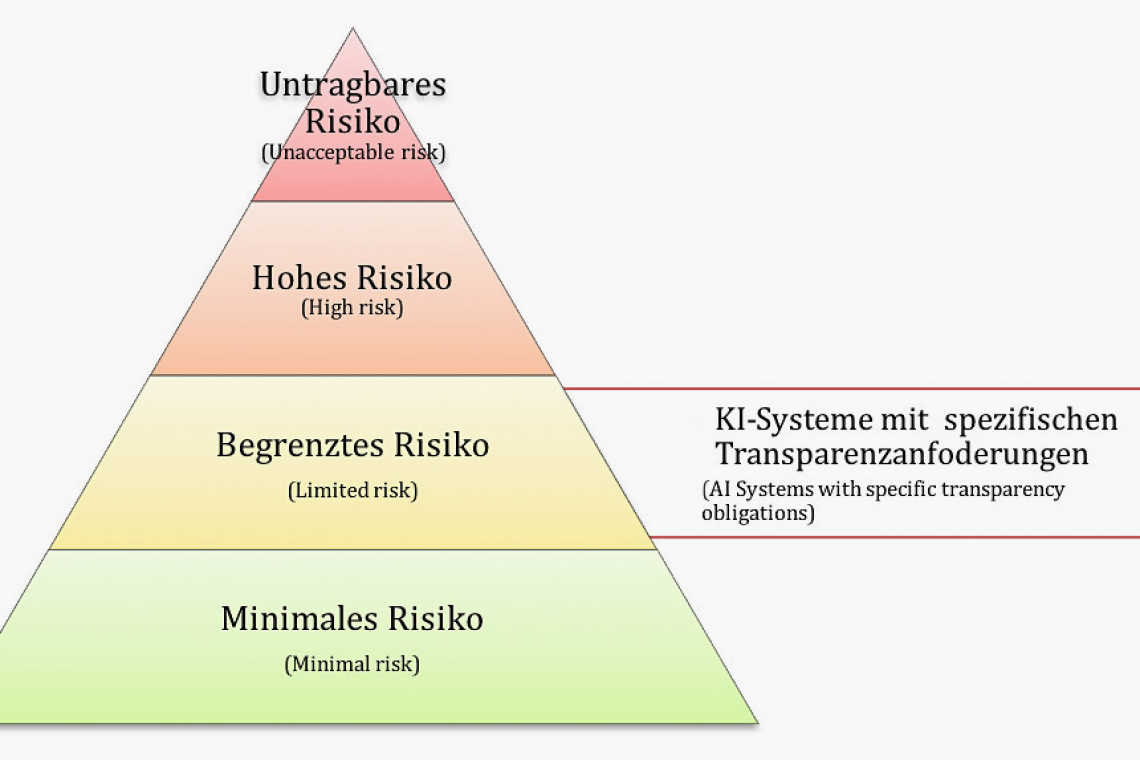

Classification by risk

AI systems are classified as follows:

- Unacceptable risk in relation to eight prohibited practices, which are explained in AIA Art. 5

- High risk according to Art. 6 AIA; these AI systems are subject to conformity assessment procedures by third parties, as is the case with medical devices

- Limited risk with special transparency requirements (Art. 50 AIA), e.g. chatbots

- Minimal or no risk, for which providers should comply with voluntary codes of conduct (Art. 95 AIA), e.g. video games or spam filters
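
An organization that keeps an inventory of its AI systems could record the four tiers as a simple data structure. The sketch below is an illustration only: the inventory entries and the `describe` helper are hypothetical, and assigning a tier remains a legal assessment that this code does not perform.

```python
from enum import Enum


class RiskTier(Enum):
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Article reference and headline consequence per tier, paraphrased from the list above.
TIER_INFO = {
    RiskTier.UNACCEPTABLE: ("Art. 5", "prohibited practice"),
    RiskTier.HIGH: ("Art. 6", "conformity assessment"),
    RiskTier.LIMITED: ("Art. 50", "transparency requirements"),
    RiskTier.MINIMAL: ("Art. 95", "voluntary codes of conduct"),
}

# Hypothetical inventory; the tiers are entered manually after a legal review.
inventory = {
    "support-chatbot": RiskTier.LIMITED,
    "spam-filter": RiskTier.MINIMAL,
}


def describe(system: str) -> str:
    """Summarize the recorded tier of one inventoried system."""
    tier = inventory[system]
    article, consequence = TIER_INFO[tier]
    return f"{system}: {tier.value} risk ({article} AIA), {consequence}"


print(describe("support-chatbot"))
```

Keeping the article reference next to each tier makes it easier to trace every inventory entry back to the legal text.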

Special case of AI systems with a general purpose

Such a system is "an AI system that is based on a general-purpose AI model and is capable of serving a variety of purposes both for direct use and for integration into other AI systems".

Chapter V of the AIA sets out the obligations of providers of general-purpose AI models, e.g. drawing up technical documentation and providing information to other providers who integrate the model into their own AI systems.

Safe development and use of high-risk AI systems

Since February 2, 2025, providers and operators of HR AI have been obliged to build up sufficient AI competence (AI literacy, Art. 4 AIA) among their staff. Operators should ensure that the persons assigned to implement the operating instructions and to carry out supervision have an appropriate level of AI competence, knowledge and authority to perform these tasks.

What do providers of high-risk AI systems need to do now?

As a basis for the safety and performance of HR AI, providers must establish, continuously develop and maintain a risk management system and a quality management system. SMEs and start-ups may provide the technical documentation in a simplified form.

Providers must, for example, ensure the traceability of system functions (processes and events) and post-market monitoring, including by automatically recording events during the life cycle.
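
What automatic event recording might look like in practice can be sketched with an append-only log. This is an assumption-laden illustration: the AIA does not prescribe this format, and the class `EventLog`, its field names and the example events are hypothetical.

```python
import json
from datetime import datetime, timezone


class EventLog:
    """Append-only in-memory event log; a real system would persist entries durably."""

    def __init__(self):
        self._entries = []

    def record(self, event_type: str, **details) -> dict:
        """Append one event with a sequence number and a UTC timestamp."""
        entry = {
            "seq": len(self._entries) + 1,
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "event_type": event_type,
            "details": details,
        }
        self._entries.append(entry)
        return entry

    def to_jsonl(self) -> str:
        """Serialize all entries as JSON Lines, one event per line."""
        return "\n".join(json.dumps(e, sort_keys=True) for e in self._entries)


# Hypothetical events: one model decision and one human intervention.
log = EventLog()
log.record("inference", model_version="1.4.2", input_id="req-001")
log.record("human_override", operator="reviewer-7", input_id="req-001")
print(log.to_jsonl())
```

Sequence numbers and timestamps make it possible to reconstruct the order of events later, which is the point of traceability.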

Furthermore, the legislator provides for extensive transparency obligations and retention periods. For AI systems that are intended for direct interaction with natural persons, for example, it is required that the persons are informed that they are interacting with an AI system. A list of the requirements can be found on the VDE website.

Conformity assessment

Depending on the application, a conformity assessment of the HR AI must also be carried out.

HR AI providers issue a written, machine-readable, physically or electronically signed EU declaration of conformity "covering all Union legislation applicable to the high-risk AI system".

What are the duties of operators of high-risk AI systems?

The obligations for operators of HR AI are summarized in Art. 26 of the AIA. Among other things, they must take appropriate measures to ensure that they use such systems in accordance with the accompanying operating instructions. The requirements for human supervision must also be implemented, building on the technical implementation by the provider. Operators are obliged to report serious incidents to providers, importers, distributors and market surveillance authorities. Other requirements include informing employee representatives and affected employees about the use of HR AI and the obligation to carry out a data protection impact assessment.

In the case of learning AI systems, the HR AI is subject to a new conformity assessment procedure in the event of a significant change, regardless of whether the modified system is to be placed on the market again or continues to be used by the current operator.

Preparation for the AIA by providers and operators

The establishment of AI real laboratories (called AI regulatory sandboxes in the English text of the AIA) is intended to enable providers to develop, train, test and validate AI systems for a limited period of time before they are placed on the market. In addition, providers can, under certain conditions, test their systems under real conditions outside the AI real laboratories.

It is clear that providers and operators of AI systems must build up the relevant skills among their staff at an early stage. Providers of HR AI should already take into account the increased effort in technical documentation and quality management in terms of organizational and financial resources.

www.vde.com/topics-de/kuenstliche-intelligenz/news/ki-systeme-eu-artificial-intelligence-act, https://eur-lex.europa.eu/eli/reg/2024/1689/oj