As part of a graduation project, a system was developed to train Convolutional Neural Networks (CNN) to reliably recognize electronic components in X-ray images. The concept of transfer learning is used here. A procedure was designed to generate suitable training data sets. This made it possible to train and evaluate various network architectures for image classification and object detection. Freely available models from another domain were used for transfer learning. The results show that transfer learning is a suitable method for reducing the effort (time, data volume) involved in creating classification and object detector models. The created models show a high accuracy in classification and object detection even with unknown data. Applications related to the evaluation of radiographic images of electronic assemblies can thus be designed more effectively.

Part 1 of this issue introduces the problem and deals with object recognition using neural networks.

As part of a graduation thesis, a system was developed with which convolutional neural networks (CNN) can be trained to reliably identify electronic components in X-ray images. The concept of transfer learning is used to train the CNNs. A procedure was designed and implemented to generate suitable training data sets. With the help of the training data sets, different network architectures for image classification and object detection could be trained and evaluated. For the transfer learning, freely available models from another domain were used. The results show that transfer learning is a suitable method to reduce the effort (time, amount of data) in creating classification and object detector models. The models created show a high degree of accuracy in classification and object detection, even with unknown data. Applications that are related to the evaluation of radiographic images of electronic assemblies can be designed more effectively thanks to the available knowledge.

Part 1 in this issue introduces the problem and deals with object recognition using neural networks.

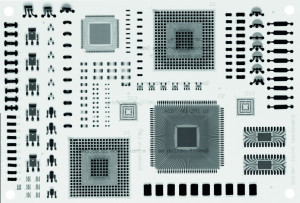

Fig. 1: Left: the test assembly (phoenix x-ray), right: the corresponding X-ray image

Motivation

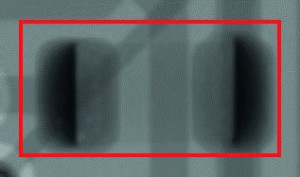

Non-destructive testing and evaluation of electronic assemblies and components is a sub-area of assembly and connection technology (AVT). X-ray imaging is often used to visualize hidden structures when examining the solder joints of ball grid arrays (BGA) or packaged modules with unknown components. However, since X-ray images of the examined components or assemblies differ significantly from their external appearance, it can sometimes be difficult for the observer to identify structures and additionally determine whether they have faults or other abnormalities. Figure 1 shows the differences using a practical example in the form of a test assembly. The various components can be differentiated from each other in their external appearance by means of a photo or simply by looking at them based on their contour, labeling and coloring. However, these object features cannot usually be identified using radiography. However, it does reveal new information about the inside of components and hidden solder joints.

At first glance, a BGA often only appears as a defined collection of many round solder contacts in the X-ray image. On closer inspection, bonding wires or flip-chip contacts or the interposer with vias can also be recognized as characteristic features. The ability to recognize specific properties can be extended to many other package types, but requires a basic knowledge of the internal structure.

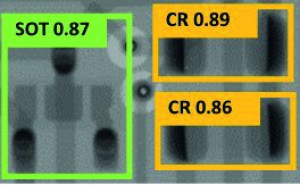

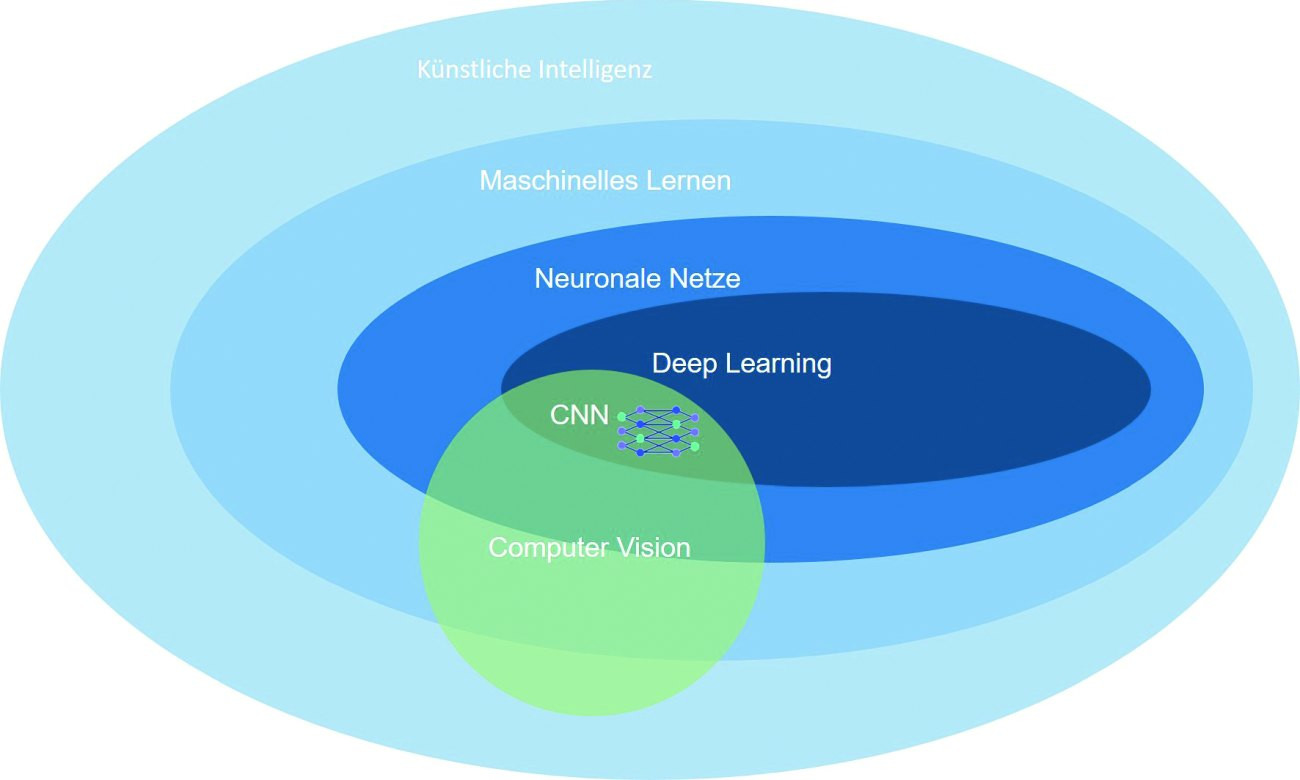

Fig. 2: CNN - classification in the world of AI

Fig. 2: CNN - classification in the world of AI

This is where the idea of automatically enriching images with additional information that makes it easier for the viewer to recognize and evaluate them comes from. This can improve the evaluation of X-ray images of electronic assemblies in the context of quality assurance, but especially in troubleshooting. Up to now, this has mostly been a time-consuming manual process in which the individual experience of the evaluator plays a decisive role. As part of a graduation project, a system was developed at TU Dresden with which convolutional neural networks (CNN) can be trained to reliably recognize electronic components in X-ray images. The key findings and results of this work are presented in this article. They show that the concept of transfer learning is a suitable method for reducing the effort (time, amount of data) in the creation of classification and object detector models. The created models show a high accuracy in classification and object detection even with unknown initial data.

1 Introduction

Automatic object recognition in images is a current topic and is undergoing rapid development through the use of machine learning (ML) methods [1]. In this context, methods based on the use of CNNs are used in particular. CNNs can be understood as a sub-area of the concept of artificial intelligence (AI). For a better understanding of the terms relating to AI, it is important to describe and differentiate between some of them in more detail. AI generally deals with the automation of intelligent behavior. The aim is to replicate human decision-making structures or to process problems independently. ML is a sub-area of AI and encompasses the task of artificially generating knowledge from experience. For this purpose, recurring patterns are recognized in existing data sets, generalized and applied to the analysis of unknown data. Deep learning (DL) is in turn a sub-area of ML or a special method of data processing using multilayer (deep) neural networks. DL systems can imitate human information processing and can learn without external guidance. DL is particularly suitable for pattern recognition from very large data sets. CNNs belong to the field of DL. Another important sub-area is machine vision or computer vision, which can identify objects in digital images and extract and process relevant information [2]. Figure 2 provides a compact illustration of this form of categorization.

The training of neural networks generally requires a large amount of annotated data sets in order to recognize underlying structures and patterns. However, this poses a particular difficulty in the present context of testing and assessing electronic assemblies and components, as no suitable data sets are currently available. The creation of a data set is in turn the most complex and expensive process in the design of such systems. The concept of transfer learning (TL) is presented to address this problem. At least in theory, this should make it possible to significantly reduce the amount of data required. Similar to humans, 'knowledge' about structures can be transferred from one domain to another. This is comparable to learning a new language, for example. In the context of image processing with CNNs, the similarity of the image structure is utilized. An image can be seen as a collection of many corners, edges, circles, colors, etc.. These rough structures form shapes, and the combination of shapes forms objects. It is known that CNNs process images in this way, initially extracting very coarse features which then grow into specific features. Today, a large number of different models are available for the image processing of everyday objects, which can be used directly. However, there are significant differences between X-ray images and photographic images (gray scale, type of image, etc.). It was therefore necessary to investigate whether and how well these models can be transferred to the context of radiography. In general, a distinction is made between image classification and object detection. Suitable data sets were developed and implemented with relatively little effort on the basis of a (semi-)automatic generation of assemblies and annotation based on design data.

2 Object recognition in general

The term 'object recognition' can generally be understood to mean different tasks in computer-aided image processing and can be categorized according to their complexity as shown in Table 1.

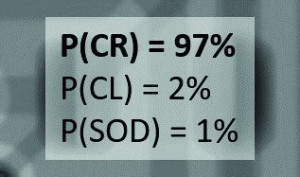

The first task is 'classification'. This involves assigning a label with the most probable class to the input image. If the image contains several objects, only one label will be output. For this reason, only one object should be included in the image if possible. The following example of the classification task shows the output for an object which is a chip resistor (CR) with a probability of 97% (P).

The second task is a 'classification and localization'. Here, in addition to the label of the most probable class, the position of the surrounding rectangle is also output. In this case, too, only one object can be processed.

The third and most complex task is 'object detection'. The aim here is to determine which object is located at which position in the image. The input image can contain any number of objects from different classes.

The mode of operation of an object detector generally comprises two working modes. The first mode is often referred to as training and is used to create a model of the objects or object classes being searched for. The second mode is called inference. In this mode, the detector attempts to evaluate the input data using the model.

In most cases, object recognition in image data takes place in two to three steps. In the first step, the input data is pre-processed. This may involve resizing, converting to a different data format or changing the color space, for example. In the second step, object detection takes place. The image is segmented and regions of interest (RoI) are determined. In the third step, the algorithm classifies the regions or objects (image sections). These are compared with a model to determine the degree of correspondence. The data model forms the basis for the quality of the classification.

The preparation of this data model (training) works in a similar way to the path of inference. For a good model, a large number of annotated data with a high degree of variation is required. The larger the underlying data set, the more robust and precise the result of the classification. There are different methods with which an object detector can be realized. These include 'conventional' methods, which include methods based on template matching or feature recognition. However, these are only mentioned for the sake of completeness and will not be considered further below.

With the publication of AlexNet in 2012, methods based on neural networks came into focus [3]. These 'new' methods deliver significantly more precise results. The novelty of these methods lies in the adaptive learning process. The developer does not have to define any features of the objects to be examined, as the model independently recognizes the underlying structures.

3 Object recognition with CNN

As already mentioned at the beginning, CNNs are increasingly being used for automatic object recognition in images. These have an input layer, an output layer and any number of 'hidden' layers. The hidden layers fulfill several functions and consist of different modules. These modules include the so-called convolutional layers. In these, filter kernels (operators) are applied to the image data with a convolution operation (convolution) in order to extract features. The convolution operation corresponds to a matrix multiplication with a feature map as the result. Pooling layers are also used to condense this information. Max pooling is frequently used. This involves a maximum operator of a certain size (kernel size) and stride. The more complex the recognition task, the greater the number of layers between input and output. This is referred to as the depth (sequential structures) and width (parallel structures) of a network. In addition to the design of detector architectures, the design of such networks is the subject of current research and development.

![Abb. 3: Beispielhafte Darstellung der Ergebnisse (Feature Maps) der fünf Convolution-Blöcke eines VGG-16 (VGG16 ist eine von 6 vortrainierten Architekturen von VGG Net – Visual Geometry Group – mit 16 gewichteten Layern). Unter den Grafiken sind die jeweiligen Auflösungen und Anzahlen der Einzelbilder dargestellt [4] Abb. 3: Beispielhafte Darstellung der Ergebnisse (Feature Maps) der fünf Convolution-Blöcke eines VGG-16 (VGG16 ist eine von 6 vortrainierten Architekturen von VGG Net – Visual Geometry Group – mit 16 gewichteten Layern). Unter den Grafiken sind die jeweiligen Auflösungen und Anzahlen der Einzelbilder dargestellt [4]](/images/stories/Abo-2022-10/plus-2022-10-1011.jpg) Fig. 3: Exemplary representation of the results (feature maps) of the five convolution blocks of a VGG-16 (VGG16 is one of 6 pre-trained architectures of VGG Net - Visual Geometry Group - with 16 weighted layers). The respective resolutions and numbers of individual images are shown below the graphics [4]

Fig. 3: Exemplary representation of the results (feature maps) of the five convolution blocks of a VGG-16 (VGG16 is one of 6 pre-trained architectures of VGG Net - Visual Geometry Group - with 16 weighted layers). The respective resolutions and numbers of individual images are shown below the graphics [4]

In the extracted features from the previous layers (feature maps), patterns are recognized by fully connected layers (FC). Each of the fully connected layers consists of nodes (neurons) that are connected to the nodes of the next layer. Each neuron has an activation function that determines whether it passes on its information or not. For this purpose, a weighted sum is formed from the connected and activated neurons (of the previous layer). Each connection has its own weight. A constant (bias) is added to this weighted sum. This sum forms the argument of the activation function. A ReLU (Rectified Linear Unit - rectangular function) is often used. These weights and constants are initialized with random values when the network is created. In a training process, both the weights and constants as well as the values of the filter kernels (shared weights) in the convolution layers are adjusted. Depending on the network model, the number of parameters that can be adjusted ranges from a few million (focus: speed, mobile applications) to over a billion (focus: accuracy). There is an almost linear relationship between the number of trainable parameters and the required training data [5].

A large number of different CNNs are already available today for image classification and feature extraction. These are usually trained, evaluated and compared using large, public data sets (ImageNet, Microsoft COCO, CIFAR 10/100, ...). These data sets mostly comprise images of everyday objects based on digital color photographs. The data-resulting CNNs do not recognize X-ray images of components and assemblies as required for the purpose of this investigation. However, they offer a variety of image filter operators that can also be applied to X-ray images with the necessary adaptations.

In addition to image classification, object detection plays a major role. Within the object detectors, features (feature maps) are extracted from the image data using a CNN (backbone). In contrast to classification, these features now run through additional networks with which RoI can be detected. As this can result in a very high number of potential regions, these are reduced by so-called pooling. There are often overlaps in the region proposals, so that several object boundaries are proposed for one object. These can be reduced using special methods. In addition to predicting the correct object class (classification), the correct prediction of the object boundaries (regression) is an essential task that is ensured by an object detector.

4 Transfer learning

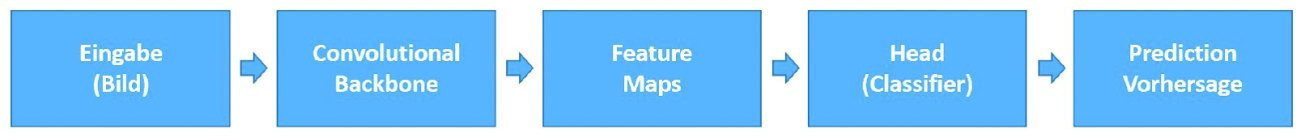

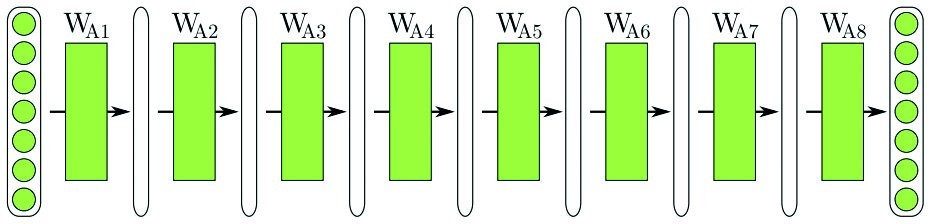

The image classification process using CNN can be visualized in a compact form as shown in Figure 4. The model consists of the so-called backbone and the head. The backbone generates features from the input data by applying filters and creates feature maps. These are classified in the head. In a newly initialized neural network, the filters are initially very unspecific and are refined and optimized during training. However, reinitializing a CNN is very time-consuming and computationally intensive and requires a very large number of annotated data sets, i.e. several hundred images per object class. In this context, annotation means linking an image with the corresponding class label.

Fig. 4: Image classification process

Fig. 4: Image classification process

The creation of a data set is generally regarded as the most complex and expensive process. However, the effort can be reduced by transferring the knowledge of a pre-trained neural network for solving a task to a new task or application. Transfer learning is basically the same as classification, whereby an already trained model is modified and further optimized.

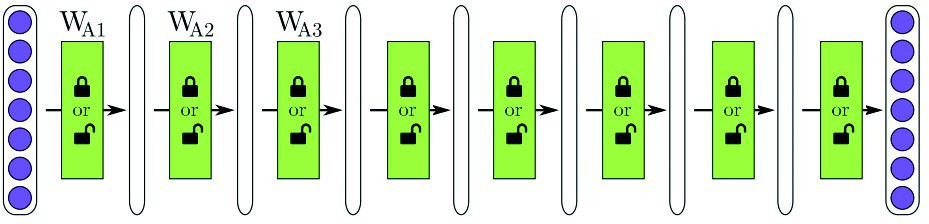

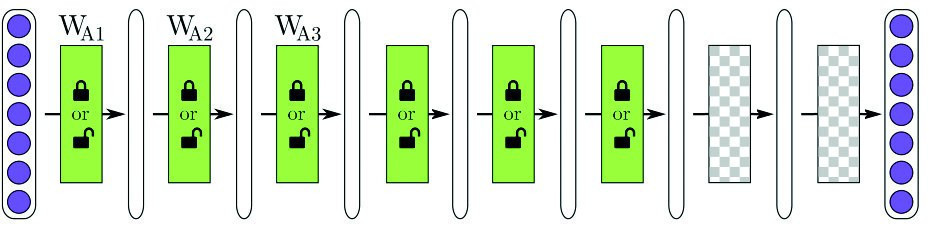

Different modification scenarios are available for transfer learning and follow the following scheme:

- Selection of a base network

- Copying the first n layers (filter kernel)

- Training the network with the target data set

The configuration of the target network (choice of base network, number of transferred or reinitialized layers) is freely configurable and is left to the user. However, the parameters mentioned depend on the size of the data set, the similarity to the source domain and the application and must usually be determined experimentally. It is generally recommended, especially for very small data sets, to replace only the last layer with a linear classifier [6].

(a) Starting point: Pre-trained network for a specific task

(a) Starting point: Pre-trained network for a specific task

(b) Scenario 1: The pre-trained network architecture is completely transferred. The individual layers can be optimized during training

(b) Scenario 1: The pre-trained network architecture is completely transferred. The individual layers can be optimized during training

(c) Scenario 2: The first n layers are transferred and the last two are initialized with random values. The transferred layers can be trained, the newly initialized ones must be trained

(c) Scenario 2: The first n layers are transferred and the last two are initialized with random values. The transferred layers can be trained, the newly initialized ones must be trained

![(d) Szenario 3: Das Netzwerk wird transferiert, nach der dritten Schicht abgeschnitten und zwei neue Schichten angehängt, nach [6] (d) Szenario 3: Das Netzwerk wird transferiert, nach der dritten Schicht abgeschnitten und zwei neue Schichten angehängt, nach [6]](/images/stories/Abo-2022-10/plus-2022-10-1016.jpg) (d) Scenario 3: The network is transferred, cut off after the third layer and two new layers are added, according to [6].

(d) Scenario 3: The network is transferred, cut off after the third layer and two new layers are added, according to [6].

Literature

[1] K. Schmidt et al: Enhanced X-Ray Inspection of Solder Joints in SMT Electronics Production using Convolutional Neural Networks, 2020 IEEE 26th Int. Symp. Des. Technol. Electron. Packag. SIITME 2020 - Conf. Proc., 2020, 26-31, doi: 10.1109/SIITME50350.2020.9292292

[2] Artificial intelligence - what is AI anyway?, 2022. https://www.optadata.at/journal/ki-begriffsdefinitionen/

[3] Z. Zou; Z. Shi; Y. Guo; J. Ye: Object Detection in 20 Years: A Survey, 2019, 1-39, 2019, [Online], Available: http://arxiv.org/abs/1905.05055

[4] PlotNeuralNet, https://github.com/HarisIqbal88/PlotNeuralNet

[5] M. Haldar: How much training data do you need?, https://malay-haldar.medium.com/how-much-training-data-do-you-need-da8ec091e956

[6] J. Yosinski; J. Clune; Y. Bengio; H. Lipson: How transferable are features in deep neural networks?, Adv. Neural Inf. Process. Syst., vol. 4, no. January, 2014, 3320-3328